External Meta-Analytic Resources

Contents

External Meta-Analytic Resources¶

# First, import the necessary modules and functions

import os

from pprint import pprint

from repo2data.repo2data import Repo2Data

# Install the data if running locally, or points to cached data if running on neurolibre

DATA_REQ_FILE = os.path.abspath("../binder/data_requirement.json")

repo2data = Repo2Data(DATA_REQ_FILE)

data_path = repo2data.install()

data_path = os.path.join(data_path[0], "data")

# Set an output directory for any files generated during the book building process

out_dir = os.path.abspath("../outputs/")

os.makedirs(out_dir, exist_ok=True)

---- repo2data starting ----

/srv/conda/envs/notebook/lib/python3.7/site-packages/repo2data

Config from file :

/home/jovyan/binder/data_requirement.json

Destination:

./../data/nimare-paper

Info : ./../data/nimare-paper already downloaded

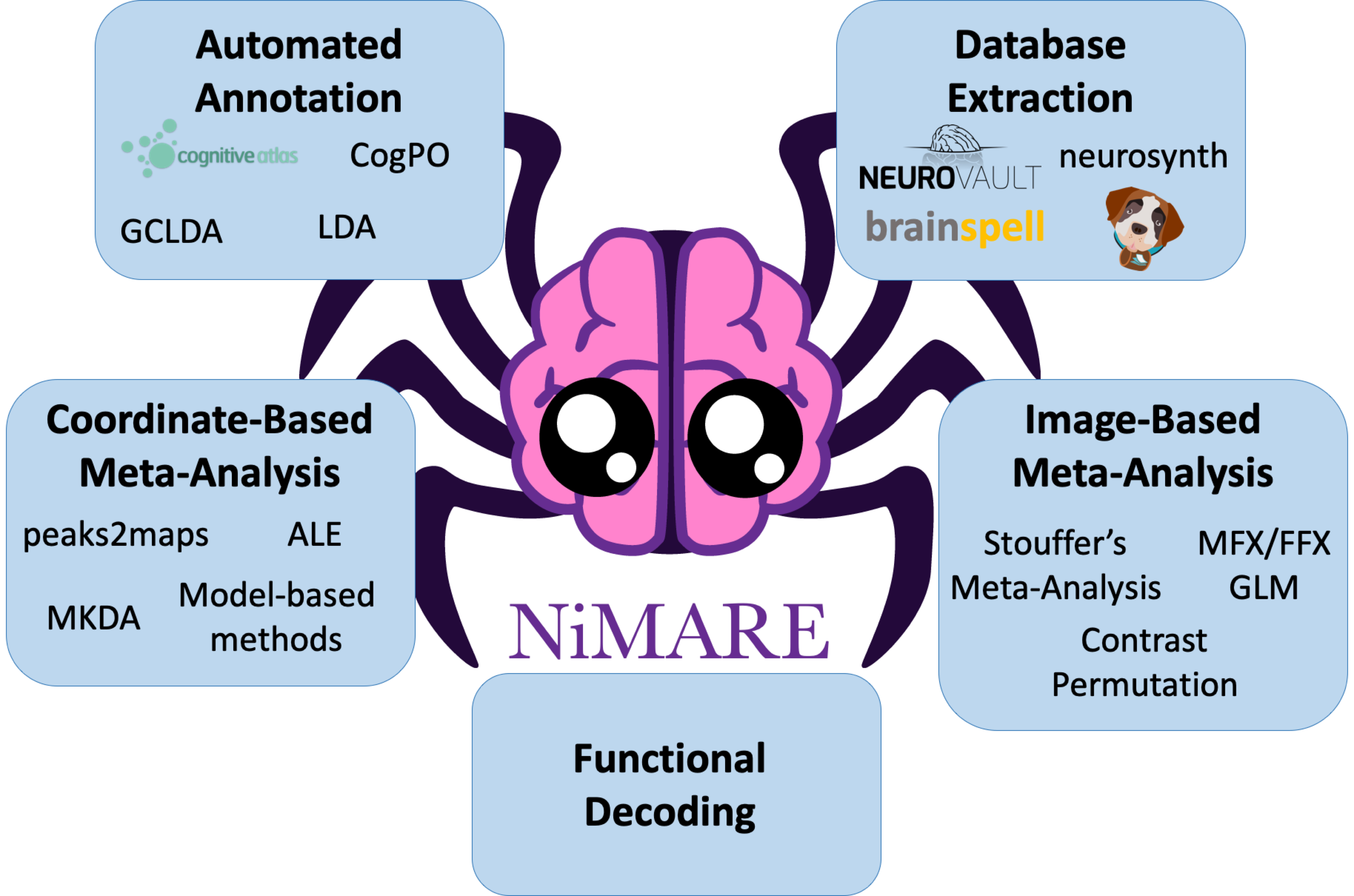

Large-scale meta-analytic databases have made systematic meta-analyses of the neuroimaging literature possible. These databases combine results from neuroimaging studies, whether represented as coordinates of peak activations or unthresholded statistical images, with important study metadata, such as information about the samples acquired, stimuli used, analyses performed, and mental constructs putatively manipulated. The two most popular coordinate-based meta-analytic databases are BrainMap and Neurosynth, while the most popular image-based database is NeuroVault.

The studies archived in these databases may be either manually or automatically annotated—often with reference to a formal ontology or controlled vocabulary. Ontologies for cognitive neuroscience define what mental states or processes are postulated to be manipulated or measured in experiments, and may also include details of said experiments (e.g.,the cognitive tasks employed), relationships between concepts (e.g., verbal working memory is a kind of working memory), and various other metadata that can be standardized and represented in a machine-readable form [Poldrack and Yarkoni, 2016, Poldrack, 2010, Turner and Laird, 2012]. Some of these ontologies are very well-defined, such as expert-generated taxonomies designed specifically to describe only certain aspects of experiments and the relationships between elements within the taxonomy, while others are more loosely defined, in some cases simply building a vocabulary based on which terms are commonly used in cognitive neuroscience articles.

BrainMap¶

BrainMap [Fox et al., 2005, Fox and Lancaster, 2002, Laird et al., 2005] relies on expert annotators to label individual comparisons within studies according to its internally developed ontology, the BrainMap Taxonomy [Fox et al., 2005]. While this approach is likely to be less noisy than an automated annotation method using article text or imaging results to predict content, it is also subject to a number of limitations. First, there are simply not enough annotators to keep up with the ever-expanding literature. Second, any development of the underlying ontology has the potential to leave the database outdated. For example, if a new label is added to the BrainMap Taxonomy, then each study in the full BrainMap database needs to be evaluated for that label before that label can be properly integrated into the database. Finally, a manually annotated database like BrainMap will be biased by which subdomains within the literature are annotated. While outside contributors can add and annotate studies to the database, the main source of annotations has been researchers associated with the BrainMap project.

While BrainMap is a semi-closed resource (i.e., a collaboration agreement is required to access the full database), registered users may search the database using the Sleuth search tool, in order to collect samples for meta-analyses. Sleuth can export these study collections as text files with coordinates. NiMARE provides a function to import data from Sleuth text files into the NiMARE Dataset format.

The function convert_sleuth_to_dataset() can be used to convert text files exported from Sleuth into NiMARE Datasets.

Here, we convert two files from a previous publication by NiMARE contributors [Yanes et al., 2018] into two separate Datasets.

from nimare import io

sleuth_dset1 = io.convert_sleuth_to_dataset(

os.path.join(data_path, "contrast-CannabisMinusControl_space-talairach_sleuth.txt")

)

sleuth_dset2 = io.convert_sleuth_to_dataset(

os.path.join(data_path, "contrast-ControlMinusCannabis_space-talairach_sleuth.txt")

)

print(sleuth_dset1)

print(sleuth_dset2)

# Save the Datasets to files for future use

sleuth_dset1.save(os.path.join(out_dir, "sleuth_dset1.pkl.gz"))

sleuth_dset2.save(os.path.join(out_dir, "sleuth_dset2.pkl.gz"))

INFO:matplotlib.font_manager:generated new fontManager

Dataset(41 experiments, space='ale_2mm')

Dataset(41 experiments, space='ale_2mm')

Neurosynth¶

Neurosynth [Yarkoni et al., 2011] uses a combination of web scraping and text mining to automatically harvest neuroimaging studies from the literature and to annotate them based on term frequency within article abstracts. As a consequence of its relatively crude automated approach, Neurosynth has its own set of limitations. First, Neurosynth is unable to delineate individual comparisons within studies, and consequently uses the entire paper as its unit of measurement, unlike BrainMap. This risks conflating directly contrasted comparisons (e.g., A>B and B>A), as well as comparisons which have no relation to one another. Second, coordinate extraction and annotation are noisy. Third, annotations automatically performed by Neurosynth are also subject to error, although the reasons behind this are more nuanced and will be discussed later in this paper. Given Neurosynth’s limitations, we recommend that it be used for casual, exploratory meta-analyses rather than for publication-quality analyses. Nevertheless, while individual meta-analyses should not be published from Neurosynth, many derivative analyses have been performed and published (e.g., [Chang et al., 2013, de la Vega et al., 2016, de la Vega et al., 2018, Poldrack et al., 2012]). As evidence of its utility, Neurosynth has been used to define a priori regions of interest (e.g., [Josipovic, 2014, Zeidman et al., 2012, Wager et al., 2013]) or perform meta-analytic functional decoding (e.g., [Chen et al., 2018, Pantelis et al., 2015, Tambini et al., 2017]) in many first-order (rather than meta-analytic) fMRI studies.

Here, we show code that would download the Neurosynth database from where it is stored (https://github.com/neurosynth/neurosynth-data) and convert it to a NiMARE Dataset using fetch_neurosynth(), for the first step, and convert_neurosynth_to_dataset(), for the second.

from nimare import extract

# Download the desired version of Neurosynth from GitHub.

files = extract.fetch_neurosynth(

data_dir=data_path,

version="7",

source="abstract",

vocab="terms",

overwrite=False,

)

pprint(files)

neurosynth_db = files[0]

INFO:nimare.extract.utils:Dataset found in ./../data/nimare-paper/data/neurosynth

INFO:nimare.extract.extract:Searching for any feature files matching the following criteria: [('source-abstract', 'vocab-terms', 'data-neurosynth', 'version-7')]

Downloading data-neurosynth_version-7_coordinates.tsv.gz

File exists and overwrite is False. Skipping.

Downloading data-neurosynth_version-7_metadata.tsv.gz

File exists and overwrite is False. Skipping.

Downloading data-neurosynth_version-7_vocab-terms_source-abstract_type-tfidf_features.npz

File exists and overwrite is False. Skipping.

Downloading data-neurosynth_version-7_vocab-terms_vocabulary.txt

File exists and overwrite is False. Skipping.

[{'coordinates': '/home/jovyan/data/nimare-paper/data/neurosynth/data-neurosynth_version-7_coordinates.tsv.gz',

'features': [{'features': '/home/jovyan/data/nimare-paper/data/neurosynth/data-neurosynth_version-7_vocab-terms_source-abstract_type-tfidf_features.npz',

'vocabulary': '/home/jovyan/data/nimare-paper/data/neurosynth/data-neurosynth_version-7_vocab-terms_vocabulary.txt'}],

'metadata': '/home/jovyan/data/nimare-paper/data/neurosynth/data-neurosynth_version-7_metadata.tsv.gz'}]

Note

Converting the large Neurosynth and NeuroQuery datasets to NiMARE Dataset objects can be a very memory-intensive process.

For the sake of this book, we show how to perform the conversions below, but actually load and use pre-converted Datasets.

# Convert the files to a Dataset.

# This may take a while (~10 minutes)

neurosynth_dset = io.convert_neurosynth_to_dataset(

coordinates_file=neurosynth_db["coordinates"],

metadata_file=neurosynth_db["metadata"],

annotations_files=neurosynth_db["features"],

)

print(neurosynth_dset)

# Save the Dataset for later use.

neurosynth_dset.save(os.path.join(out_dir, "neurosynth_dataset.pkl.gz"))

Here, we load a pre-generated version of the Neurosynth Dataset.

from nimare import dataset

neurosynth_dset = dataset.Dataset.load(os.path.join(data_path, "neurosynth_dataset.pkl.gz"))

print(neurosynth_dset)

Dataset(14371 experiments, space='mni152_2mm')

Note

Many of the methods in NiMARE can be very time-consuming or memory-intensive.

Therefore, for the sake of ensuring that the analyses in this article may be reproduced by as many people as possible, we will use a reduced version of the Neurosynth Dataset, only containing the first 500 studies, for those methods which may not run easily on the full database.

neurosynth_dset_first_500 = neurosynth_dset.slice(neurosynth_dset.ids[:500])

print(neurosynth_dset)

# Save this Dataset for later use.

neurosynth_dset_first_500.save(os.path.join(out_dir, "neurosynth_dataset_first500.pkl.gz"))

Dataset(14371 experiments, space='mni152_2mm')

In addition to a large corpus of coordinates, Neurosynth provides term frequencies derived from article abstracts that can be used as annotations.

One additional benefit to Neurosynth is that it has made available the coordinates for a large number of studies for which the study abstracts are also readily available. This has made the Neurosynth database a common resource upon which to build other automated ontologies. Data-driven ontologies which have been developed using the Neurosynth database include the generalized correspondence latent Dirichlet allocation (GCLDA) [Rubin et al., 2017] topic model and Deep Boltzmann machines [Monti et al., 2016].

NeuroQuery¶

A related resource is NeuroQuery [Dockès et al., 2020]. NeuroQuery is an online service for large-scale predictive meta-analysis. Unlike Neurosynth, which performs statistical inference and produces statistical maps, NeuroQuery is a supervised learning model and produces a prediction of the brain areas most likely to contain activations. These maps predict locations where studies investigating a given area (determined by the text prompt) are likely to produce activations, but they cannot be used in the same manner as statistical maps from a standard coordinate-based meta-analysis. In addition to this predictive meta-analytic tool, NeuroQuery also provides a new database of coordinates, text annotations, and metadata via an automated extraction approach that improves on Neurosynth’s original methods.

While NiMARE does not currently include an interface to NeuroQuery’s predictive meta-analytic method, there are functions for downloading the NeuroQuery database and converting it to NiMARE format, much like Neurosynth.

The functions for downloading the NeuroQuery database and converting it to a Dataset are fetch_neuroquery() and convert_neurosynth_to_dataset(), respectively.

We are able to use the same function for converting the database to a Dataset for NeuroQuery as Neurosynth because both databases store their data in the same structure.

# Download the desired version of NeuroQuery from GitHub.

files = extract.fetch_neuroquery(

data_dir=data_path,

version="1",

source="combined",

vocab="neuroquery6308",

type="tfidf",

overwrite=False,

)

pprint(files)

neuroquery_db = files[0]

INFO:nimare.extract.utils:Dataset found in ./../data/nimare-paper/data/neuroquery

INFO:nimare.extract.extract:Searching for any feature files matching the following criteria: [('source-combined', 'vocab-neuroquery6308', 'type-tfidf', 'data-neuroquery', 'version-1')]

Downloading data-neuroquery_version-1_coordinates.tsv.gz

File exists and overwrite is False. Skipping.

Downloading data-neuroquery_version-1_metadata.tsv.gz

File exists and overwrite is False. Skipping.

Downloading data-neuroquery_version-1_vocab-neuroquery6308_source-combined_type-tfidf_features.npz

File exists and overwrite is False. Skipping.

Downloading data-neuroquery_version-1_vocab-neuroquery6308_vocabulary.txt

File exists and overwrite is False. Skipping.

[{'coordinates': '/home/jovyan/data/nimare-paper/data/neuroquery/data-neuroquery_version-1_coordinates.tsv.gz',

'features': [{'features': '/home/jovyan/data/nimare-paper/data/neuroquery/data-neuroquery_version-1_vocab-neuroquery6308_source-combined_type-tfidf_features.npz',

'vocabulary': '/home/jovyan/data/nimare-paper/data/neuroquery/data-neuroquery_version-1_vocab-neuroquery6308_vocabulary.txt'}],

'metadata': '/home/jovyan/data/nimare-paper/data/neuroquery/data-neuroquery_version-1_metadata.tsv.gz'}]

# Convert the files to a Dataset.

# This may take a while (~10 minutes)

neuroquery_dset = io.convert_neurosynth_to_dataset(

coordinates_file=neuroquery_db["coordinates"],

metadata_file=neuroquery_db["metadata"],

annotations_files=neuroquery_db["features"],

)

print(neuroquery_dset)

# Save the Dataset for later use.

neuroquery_dset.save(os.path.join(out_dir, "neuroquery_dataset.pkl.gz"))

Here, we load a pre-generated version of the NeuroQuery Dataset.

neuroquery_dset = dataset.Dataset.load(os.path.join(data_path, "neuroquery_dataset.pkl.gz"))

print(neuroquery_dset)

Dataset(13459 experiments, space='mni152_2mm')

NeuroVault¶

NeuroVault [Gorgolewski et al., 2015] is a public repository of user-uploaded, whole-brain, unthresholded brain maps.

Users may associate their image collections with publications, and can annotate individual maps with labels from the Cognitive Atlas, which is the ontology of choice for NeuroVault.

NiMARE includes a function, convert_neurovault_to_dataset(), with which users can search for images in NeuroVault, download those images, and convert them into a Dataset object.